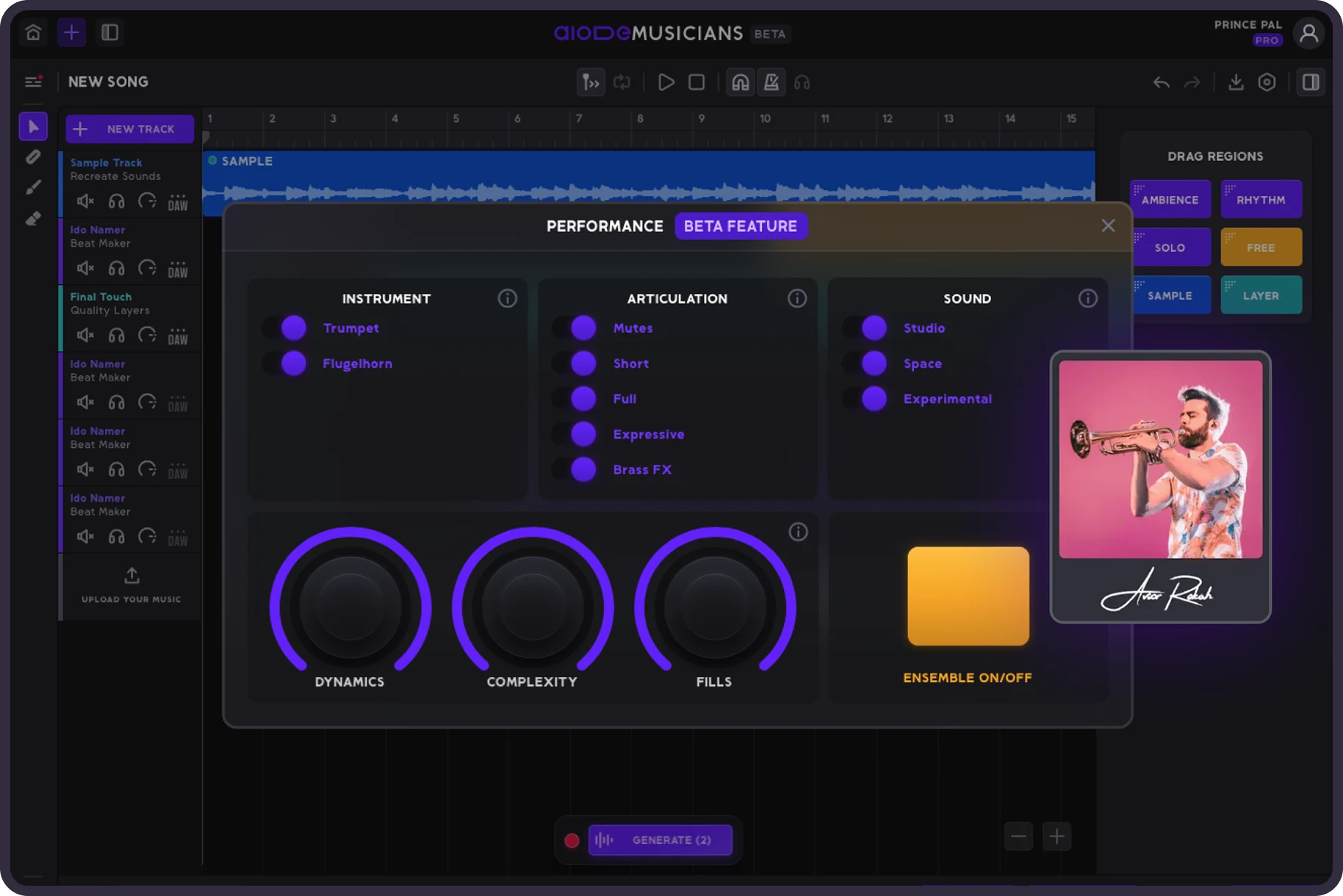

The music creation landscape has shifted significantly over the last few years. In 2026, Artificial Intelligence (AI) integration within Digital Audio Workstations (DAWs) is no longer a cutting-edge concept; it is the industry standard. From bedroom producers to top-tier industry engineers, creators are leveraging machine learning to streamline workflows, enhance creativity, and produce professional-grade sound with unprecedented speed.

This is a breakdown of how AI music creation tools are reshaping music production this year.

What percentage of producers are using AI in 2026?

Recent industry surveys suggest that nearly 78% of music producers now use some form of AI-assisted tool inside their primary DAW. This is a significant leap from 2023, when usage rates were closer to 30%. The technology has moved beyond simple novelty plug-ins to become essential infrastructure within recording software.

How is AI improving mixing and mastering efficiency?

Perhaps the most concrete impact of AI in 2026 is seen in the post-production phase.

Automated Balancing: New algorithms can now analyze a raw multi-track session and suggest a static mix balance in under 30 seconds. This saves engineers hours of manual fader riding.

Smart EQ and Compression: AI tools analyze the spectral content of a track against genre-specific benchmarks. They apply appropriate EQ and compression settings instantly, giving a polished starting point that engineers can then fine-tune.

Mastering Precision: AI mastering suites have advanced to the point where they can match the loudness and tonal balance of a reference track with 95% accuracy, allowing independent artists to achieve radio-ready quality without a large budget.
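To make the reference-matching idea concrete, here is a minimal Python sketch that measures the RMS level of a mix and of a reference track, then computes and applies the gain needed to bring the mix to the reference level. It assumes mono float samples in the range -1.0 to 1.0 and uses plain RMS rather than the K-weighted LUFS measurement defined in ITU-R BS.1770, which loudness meters and streaming normalization commonly use. It matches loudness only, not tonal balance, and the function names are hypothetical rather than any product's API.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """Return the RMS level of float samples (full scale = 1.0) in dBFS."""
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence
    # 20*log10(rms) is the same as 10*log10(mean square)
    return 10.0 * math.log10(mean_square)


def match_gain_db(mix: list[float], reference: list[float]) -> float:
    """Gain in dB that brings the mix's RMS level up or down to the reference's."""
    mix_level = rms_dbfs(mix)
    if mix_level == float("-inf"):
        raise ValueError("cannot match a silent mix")
    return rms_dbfs(reference) - mix_level


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Scale samples by a dB gain, hard-clipping to [-1.0, 1.0] as a crude safety limit.

    A real mastering chain would use a true-peak limiter instead of clipping.
    """
    factor = 10.0 ** (gain_db / 20.0)
    return [max(-1.0, min(1.0, s * factor)) for s in samples]
```

For example, a mix whose samples sit at plus or minus 0.25 is about 6.02 dB quieter than a reference at plus or minus 0.5, so `match_gain_db` returns roughly 6.02 and `apply_gain` doubles every sample.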

Can AI create musical ideas from scratch?

Yes, generative AI has become a powerful collaborator rather than just a utility.

Melody and Chord Generation: Producers struggling with writer's block can now ask their DAW to generate chord progressions or melody lines based on a specific mood or scale.

Vocal Synthesis: The quality of synthesized vocals has reached a level of realism where it is often indistinguishable from human recordings. This enables producers to create demo vocals or even final tracks without needing a session singer.
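Generating a progression "based on a specific mood or scale" has to respect some basic music theory, whatever model sits on top. The Python sketch below builds a major scale, stacks thirds to form diatonic triads, and looks up a chord sequence in a small mood table. It is a rule-based stand-in, not how any particular AI tool works: the mood names and their progressions are hypothetical placeholders, and notes are spelled with sharps only for simplicity.

```python
# Pitch-class names, sharps only (so F major shows A# rather than Bb).
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]  # whole/half-step pattern of the major scale

# Hypothetical mood table: 1-based scale degrees of each progression.
MOOD_PROGRESSIONS = {
    "uplifting": [1, 5, 6, 4],    # I-V-vi-IV
    "melancholic": [6, 4, 1, 5],  # vi-IV-I-V
    "jazzy": [2, 5, 1],           # ii-V-I
}


def major_scale(root: str) -> list[int]:
    """Pitch classes (0-11) of the seven-note major scale starting on `root`."""
    pc = NOTE_NAMES.index(root)
    scale = [pc]
    for step in MAJOR_STEPS[:-1]:  # the last step just returns to the octave
        pc = (pc + step) % 12
        scale.append(pc)
    return scale


def diatonic_triad(scale: list[int], degree: int) -> list[str]:
    """Stack thirds on a 1-based scale degree: root, third, fifth."""
    i = degree - 1
    return [NOTE_NAMES[scale[(i + k) % 7]] for k in (0, 2, 4)]


def progression_for_mood(root: str, mood: str) -> list[list[str]]:
    """Look up the mood's degree sequence and spell each chord in the given key."""
    scale = major_scale(root)
    return [diatonic_triad(scale, d) for d in MOOD_PROGRESSIONS[mood]]
```

Calling `progression_for_mood("C", "uplifting")` spells out C-E-G, G-B-D, A-C-E, F-A-C. A melody generator could then draw its notes from the same `major_scale` output so that the line stays in key.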

Is AI replacing human creativity?

Despite fears of automation replacing artists, the prevailing trend in 2026 suggests the opposite. AI is functioning as a force multiplier for human creativity. By handling complex tasks such as noise reduction, stem separation, and MIDI quantization, AI frees producers to focus on the emotional and artistic aspects of a song.
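Of the tasks listed, MIDI quantization is the simplest to show in code. This minimal Python sketch snaps note onsets to a rhythmic grid measured in ticks, with a `strength` parameter that moves each note only part of the way so some human feel survives. The `Note` type, the tick values, and the `strength` behavior are assumptions for illustration; DAW quantize functions often offer more options, such as swing.

```python
from dataclasses import dataclass


@dataclass
class Note:
    start: int      # onset in ticks (assumed non-negative)
    duration: int   # length in ticks
    pitch: int      # MIDI note number 0-127


def quantize(notes: list[Note], grid: int, strength: float = 1.0) -> list[Note]:
    """Move each onset toward the nearest grid line.

    strength=1.0 snaps fully to the grid; smaller values keep part of the
    original timing. Durations and pitches are left unchanged.
    """
    if grid <= 0:
        raise ValueError("grid must be a positive number of ticks")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    result = []
    for n in notes:
        # Integer round-half-up to the nearest multiple of the grid.
        nearest = (n.start + grid // 2) // grid * grid
        new_start = n.start + round((nearest - n.start) * strength)
        result.append(Note(new_start, n.duration, n.pitch))
    return result
```

With 480 ticks per quarter note, a 16th-note grid is 120 ticks, so `quantize(notes, 120)` moves an onset at tick 130 to tick 120, while `strength=0.5` moves it only to tick 125.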

The role of the producer is shifting from technical operator to creative director. The tools handle the "how," while the human decides the "what" and "why."

What does the future hold for AI in music?

As we look toward the latter half of the decade, we expect to see even deeper integration. We anticipate DAWs that can understand complex natural-language instructions, allowing users to mix a track simply by verbally describing the sound they want. The barrier to entry for music production will continue to fall, democratizing the art form and allowing more voices to be heard than ever before.